Computer Vision — Weeks 4, 5, 6

Hey! My name’s Michel Liao. I’m a computer science first-year at Princeton University, hoping to get a PhD in computer vision. Join me in my CV journey!

GitHub: https://github.com/Michel-Liao

Videos/Lectures

This video by Sebastian Raschka helped with implementing cross-correlation and understanding its difference with convolution.

This video on template matching by Professor Shree Nayar helped with implementing normalized cross-correlation.

I’m working on this optical flow series by Prof. Nayar.

Mini Projects

I finished implementing cross-correlation and normalized cross-correlation from scratch. Check out the code on my GitHub. (This implementation came from an assignment by Erich Liang.)

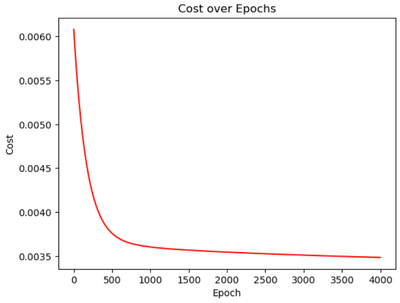

Also, I implemented multiple linear regression from scratch. Check out my blog post on the implementation and my code!

Course Progress

Finished Coursera’s Advanced Learning Algorithms. You can find my notes here!

Now, I’m on their unsupervised learning course.

Paper of the Week

Nothing these weeks. Postponing paper reading until I understand the fundamentals better.

Insights

- Cross-correlation is like a dot product between an image segment and a filter. Convolution is the same thing but the image segment matrix is rotated 180 degrees.

- Normalized cross-correlation is much better for template matching than regular cross-correlation. Normalizing lets you ignore differences in pixel intensities between the two images you’re comparing.

- The output of

torch.nn.Unfold()is much easier to understand if you draw out a 4x4 image segment (elements [0, 15]) and you draw the 3x3 kernels. You’ll notice that the columns correspond with a 3x3 kernel! Here’s some code that illustrates the unfold operation written by Erich Liang. - Breakpoints are super helpful for understanding what’s going on in your code! In some cases, it’s much better than print statements for debugging.

- A lot of machine learning is just brute-forcing things. For example, one decision tree is highly susceptible to small changes in its training dataset. The solution: Random Forest Algorithm that trains a bunch of decision trees and has them “vote” on the right label.

- We gain a lot of insight from human learning. The XGBoost Algorithm is random foresting but you emphasize the training examples your model is bad at labeling. It’s just like practicing your weaknesses.

- Implementing something from scratch really helps with understanding. Don’t just “know” the theory. Put it into practice!

Going Forward

I’m planning to finish the unsupervised learning course this week. Hopefully I can finish the new assignment I got on homography, but we’ll see.

Happy New Year!! I hope it’s a great one :)

No spam, no sharing to third party. Only you and me.

Member discussion