Computer Vision - Week 2

Hey! My name’s Michel Liao. I’m a computer science first-year at Princeton University. I hope to get a Ph.D. in computer vision, publishing meaningful research along the way. Join me in my CV journey!

Github: https://github.com/Michel-Liao

Course Progress

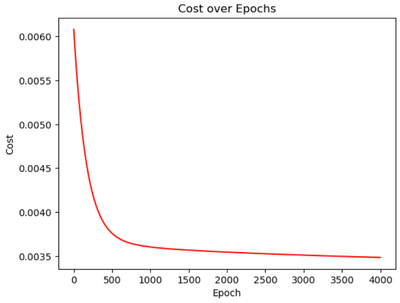

Finished Coursera’s Supervised Machine Learning: Regression and Classification! Want my notes? Click here.

Paper of the Week

“Gradient-Based Learning Applied to Document Recognition”

I was hoping to finish this paper this week, but finals preparation has slowed my progress substantially. I'd like to finish it next week, but two weeks looks more realistic.

Videos/Lectures

No lectures this week! I’m starting to learn about cross-correlation and convolution with this video and these slides.

Assignments

This assignment was given by Erich Liang.

I’ve finished the first two scripts for the second assignment. In the first, I use `np.transpose()` to permute the axes of an array, which changes its shape. In the second, I use NumPy, PIL, and matplotlib to load an image as a NumPy array and convert it to grayscale. Check them out on my GitHub!
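Here's a minimal sketch of both ideas, using NumPy only so it runs without an image file. This isn't the assignment code itself: the array contents, the `to_grayscale` helper, and the choice of BT.601 luma weights are all assumptions for illustration (a plain mean over the color channels is another common choice).

```python
import numpy as np

# Hypothetical 2x3x4 array standing in for the assignment's data.
a = np.arange(24).reshape(2, 3, 4)

# With no axes argument, np.transpose reverses the order of the axes.
reversed_axes = np.transpose(a)
print(reversed_axes.shape)  # (4, 3, 2)

# With an explicit permutation, axis i of the result is axis axes[i] of the input.
b = np.transpose(a, (1, 0, 2))
print(b.shape)  # (3, 2, 4)

# The data is unchanged; only the index order moves.
assert b[2, 1, 3] == a[1, 2, 3]


def to_grayscale(rgb):
    """Convert an (H, W, 3) RGB array to an (H, W) grayscale array.

    Assumes ITU-R BT.601 luma weights; this is an illustrative choice.
    """
    weights = np.array([0.299, 0.587, 0.114])
    # Weighted sum over the last (channel) axis.
    return rgb[..., :3] @ weights


# Synthetic 2x2 RGB image: red, green, blue, white.
img = np.array(
    [[[255, 0, 0], [0, 255, 0]],
     [[0, 0, 255], [255, 255, 255]]],
    dtype=np.float64,
)
gray = to_grayscale(img)
print(gray.shape)  # (2, 2)
```

With a real image, something like `np.asarray(Image.open(path))` from PIL would produce the `(H, W, 3)` array, and `plt.imshow(gray, cmap="gray")` from matplotlib would display the result; `path` here is a placeholder.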

Insights

- The decision boundary in logistic regression depends on which value of `z` gives the probability threshold you want. We interpret `g(z)` (the sigmoid function) as the probability that example `x` has label `1`. A common threshold for classifying `x` as having label `1` is `g(z) = 0.5`, and since the sigmoid equals 0.5 exactly at `z = 0`, the decision boundary satisfies `z = 0`.

- The implementation of logistic regression is basically the same as that of linear regression, but `f_wb` differs between the two. For logistic regression, `f_wb = g(z)`, where `g` is the sigmoid function and `z = np.dot(w, x) + b`. For linear regression, `f_wb = np.dot(w, x) + b`. Here `w` and `x` are vectors of length `n` (the number of features) holding the parameters and features, respectively, and `b` is another parameter.

- Although linear regression seems very elementary, it's actually very useful; it's not just learning how to find a best-fit line. However, gradient descent isn't always the fastest way to find a best-fit line, especially in the linear case, where closed-form solutions exist.

- The answer to a lot of ML problems seems to be “get more training data.”

- ChatGPT is really helpful for answering fundamental coding questions, like how `np.transpose()` works. The official NumPy documentation wasn’t great.
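To make the first three insights concrete, here's a rough sketch of the two `f_wb` functions, the `z = 0` decision boundary, and a closed-form least-squares fit. The function names, parameter values, and toy data are assumptions made up for illustration, not code from the course.

```python
import numpy as np


def sigmoid(z):
    """g(z) = 1 / (1 + e^(-z))."""
    return 1.0 / (1.0 + np.exp(-z))


def f_wb_linear(x, w, b):
    """Linear regression model: a plain weighted sum."""
    return np.dot(w, x) + b


def f_wb_logistic(x, w, b):
    """Logistic regression model: the same weighted sum, passed through g."""
    return sigmoid(np.dot(w, x) + b)


def predict(x, w, b, threshold=0.5):
    """Label 1 if the estimated probability reaches the threshold, else 0."""
    return int(f_wb_logistic(x, w, b) >= threshold)


# Hypothetical parameters: the boundary z = 0 is the line x1 + x2 = 3.
w = np.array([1.0, 1.0])
b = -3.0

on_boundary = np.array([1.0, 2.0])            # z = 1 + 2 - 3 = 0
print(f_wb_logistic(on_boundary, w, b))       # 0.5
print(predict(np.array([3.0, 3.0]), w, b))    # 1 (z = 3 > 0)
print(predict(np.array([0.0, 0.0]), w, b))    # 0 (z = -3 < 0)

# Closed-form alternative to gradient descent for the linear case:
# solve the least-squares problem directly on toy data y = 2x + 1.
X = np.array([[0.0], [1.0], [2.0], [3.0]])
y = 2.0 * X[:, 0] + 1.0

# Append a column of ones so the intercept b is fit along with w.
A = np.column_stack([X, np.ones(len(X))])
theta, *_ = np.linalg.lstsq(A, y, rcond=None)
w_fit, b_fit = theta[:-1], theta[-1]
```

On this noise-free toy data, `w_fit` and `b_fit` come out at approximately 2 and 1 with no iteration at all. Gradient descent becomes the better choice when there are many features or the model has no closed-form solution, as with logistic regression.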

Questions

- What types of functions are neural networks best at finding a solution to?

- What is generative AI and how does that apply to computer vision?

- How do you choose the threshold for `z` in logistic regression? When is a decision boundary corresponding to `z = 0` not good enough?

Going Forward

My focus now is on the second course in the Machine Learning Specialization, Advanced Learning Algorithms (neural networks and decision trees). My second priority is the second assignment, which has me learning PyTorch. If I have extra time, I’ll read the LeNet paper linked above.

I’m excited to get into neural networks and PyTorch!
